Hi guys,

Yesterday I was sitting at my desk doing the usual boring tasks ;-). Then I got a seq file containing more than 3 million records and 5 columns. What I had to do was fetch the duplicate records based on the first column. It's quite easy in a *nix environment without the help of any extra tool.
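Here is a minimal sketch of that task. It assumes a comma-separated file, because the post does not say which delimiter the real file uses, and a small made-up sample stands in for the 3-million-row file. `cut` pulls out the first column, `sort` groups equal keys, and `uniq -d` prints each repeated key once. The two-pass `awk` one-liner that prints the full records behind those keys is my own addition, not part of the original approach.

```shell
#!/bin/sh
# Sketch: duplicate keys (first column) in a comma-separated file.
# The sample rows and the ',' delimiter are hypothetical.
export LC_ALL=C                 # byte-wise sort order for reproducible output

data=$(mktemp)                  # temporary stand-in for the real seq file
cat > "$data" <<'EOF'
101,alex,NY,500,2021
102,bob,LA,300,2021
101,alex,NY,500,2021
103,carol,SF,200,2021
102,bob,LA,750,2022
EOF

echo "Duplicate keys:"
cut -d',' -f1 "$data" | sort | uniq -d        # 101 and 102

echo "Records whose key is duplicated:"
# Pass 1 (NR == FNR) counts each key; pass 2 prints rows whose key count > 1.
awk -F',' 'NR == FNR { n[$1]++; next } n[$1] > 1' "$data" "$data"

rm -f "$data"
```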

So I thought I should share this with you too. Here it comes...

Be warned that it is a bad idea to use uniq or any other tool to remove duplicate lines from files containing financial or other important data. In such cases, a duplicate line almost always means another transaction for the same amount, and removing it would cause a lot of trouble for the accounting department. Don't do it! Whatever you want to do, please keep a copy of the original.

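Usually 'uniq' is used together with 'sort', because uniq only compares each line with the line immediately before it, so duplicates are detected only when they are adjacent. Here we will focus on the 'uniq' command itself.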
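Here is a tiny illustration of why the two commands usually go together. The three input lines are made up. Without `sort`, the second `bob` is not next to the first one, so `uniq` keeps it.

```shell
#!/bin/sh
# uniq only drops a line when it equals the line right before it.
export LC_ALL=C   # fixed, byte-wise sort order

echo "uniq alone:"
printf 'bob\nalex\nbob\n' | uniq          # bob, alex, bob (nothing removed)

echo "sort, then uniq:"
printf 'bob\nalex\nbob\n' | sort | uniq   # alex, bob
```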

Let's have a look at the example file.

First, sort the file: this puts identical lines next to each other.

Then run uniq on the sorted data: this gives the unique records from the file.
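Here are the two steps as commands. They assume a hypothetical file `names.txt`, since the post's actual example file is not reproduced here. Writing the sorted data to a second file leaves the original untouched, in line with the advice to keep a copy.

```shell
#!/bin/sh
# Hypothetical sample file; the names are made up for illustration.
export LC_ALL=C                      # byte-wise order: uppercase sorts before lowercase
cd "$(mktemp -d)" || exit 1          # work in a scratch directory

printf '%s\n' alex bob Alex carol alex bob dave > names.txt

sort names.txt > names_sorted.txt    # step 1: identical lines become adjacent
uniq names_sorted.txt                # step 2: Alex, alex, bob, carol, dave
```

When you only need the unique lines, `sort -u names.txt` combines both steps in one command.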

What if we need the number of occurrences?

Use the -c option: it puts the number of occurrences before each row.

Here we can see that uniq is case-sensitive (e.g. alex and Alex are counted separately).

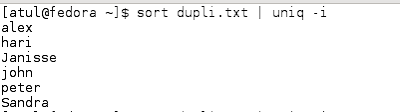
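This example shows counting with `-c` on a hypothetical list of names. `alex` and `Alex` get separate counts because the comparison is case-sensitive. The exact padding in front of each count differs between `uniq` implementations, so the comment lists only the numbers.

```shell
#!/bin/sh
# Hypothetical input; LC_ALL=C keeps the sort order byte-wise.
export LC_ALL=C

printf '%s\n' alex bob Alex carol alex bob dave | sort | uniq -c
# counts: 1 Alex, 2 alex, 2 bob, 1 carol, 1 dave
```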

What if we need case-insensitive unique records?

Use the -i option for a case-insensitive uniq, and add -c to get the number of occurrences as well.
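Here is a sketch of the case-insensitive variants on the same made-up names. `uniq -i` still needs equal lines to be adjacent, so this pairs it with `sort -f`, which folds case while sorting. With a plain `sort` in the C locale, every name starting with an uppercase letter sorts before all lowercase ones, so a `Bob` could end up between `Alex` and `alex`. Two more things to know: `-i` is not part of POSIX `uniq`, although GNU `uniq` provides it, and `uniq` keeps the first line of each group, so which spelling survives depends on the sort order.

```shell
#!/bin/sh
# Hypothetical input; sort -f folds case so alex/Alex end up adjacent.
export LC_ALL=C

printf '%s\n' alex bob Alex carol alex bob dave | sort -f | uniq -i
# Alex, bob, carol, dave   (first line of each case-insensitive group)

printf '%s\n' alex bob Alex carol alex bob dave | sort -f | uniq -ic
# counts: 3 Alex, 2 bob, 1 carol, 1 dave
```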

Now, the nice part of 'uniq'. There are 2 very important options:

-u option ---> Displays only the unrepeated lines

-d option ---> Displays only the repeated lines

-u with -c ---> Displays only the unrepeated lines, with counts

-d with -c ---> Displays only the repeated lines, with counts
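This example runs the four variants on the same hypothetical name list, sorted in the C locale. Combining `-c` with `-u` or `-d` works in GNU `uniq`. POSIX, however, lists `-c`, `-d` and `-u` as alternatives, so another implementation might reject the combination.

```shell
#!/bin/sh
# Hypothetical input, sorted byte-wise so equal lines are adjacent.
export LC_ALL=C
names() { printf '%s\n' alex bob Alex carol alex bob dave | sort; }

echo "Only unrepeated lines (-u):";       names | uniq -u    # Alex, carol, dave
echo "Only repeated lines (-d):";         names | uniq -d    # alex, bob
echo "Unrepeated lines with counts (-uc):"; names | uniq -uc # 1 Alex, 1 carol, 1 dave
echo "Repeated lines with counts (-dc):";   names | uniq -dc # 2 alex, 2 bob
```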

Hope this helps you in your daily work.

For more options and examples, keep looking for updates...

Till then... enjoy the simplicity...